kubernetes networking

an introduction to networking within a kubernetes cluster

each pod within a kubernetes cluster gets its own ip address.

pods are assigned ip addresses from the cluster’s pod network CIDR block, for example 10.244.0.0/16 (the default range used by flannel).
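the pod address range is usually chosen when the cluster is created. as a minimal sketch, assuming the cluster is bootstrapped with kubeadm (an assumption, since nothing above says how the cluster was built), the range could be set like this:

```yaml
# kubeadm cluster configuration (sketch; assumes a kubeadm-based cluster).
# The apiVersion depends on the kubeadm release in use; adjust as needed.
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
networking:
  # CIDR block from which pod ip addresses are allocated
  podSubnet: 10.244.0.0/16
```

the network plugin installed in the cluster has to be configured with the same range.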

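kubernetes expects us to configure networking in such a way that:

- every pod can communicate with every other pod in the cluster without NAT
- every node can communicate with every pod in the cluster, and vice versa, without NAT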

to address this requirement, most kubernetes cluster platforms support this networking model out of the box.

we know that each pod within a kubernetes cluster has its own ip address. but pods are ephemeral, and so are their ip addresses. because of this, it’s impractical to use pod ip addresses directly in our application: we would have to update the address every time a pod is recreated.

to tackle this, we need a stable address that stays the same even as the pods behind it come and go. this is where services come into play.

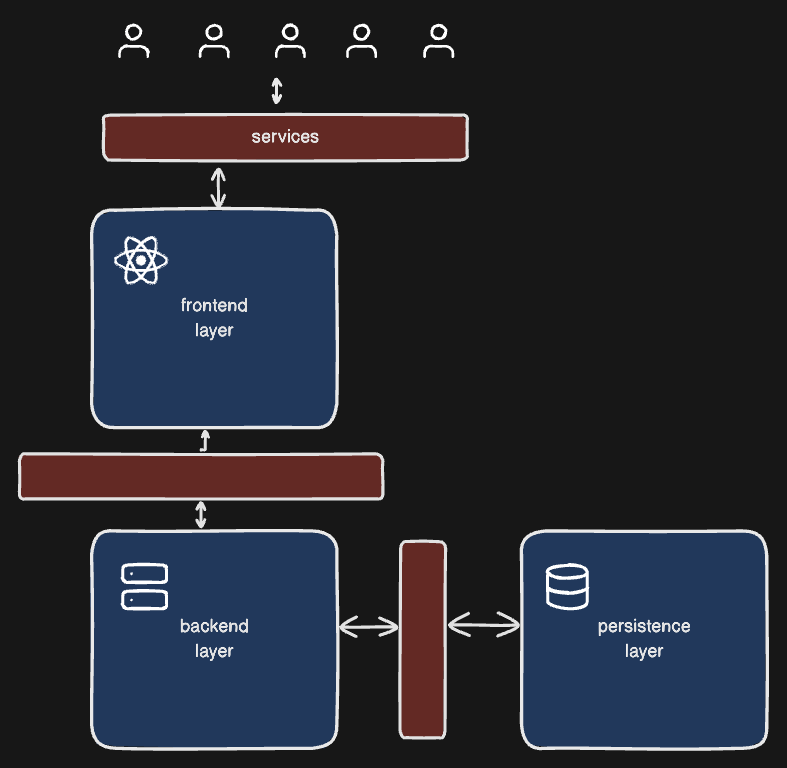

tl;dr: services enable communication.

aside from the underlying infrastructure, kubernetes services enable communication between various components within and outside the application.

services help applications communicate with other applications or users.

let’s get into some of the common services in kubernetes:

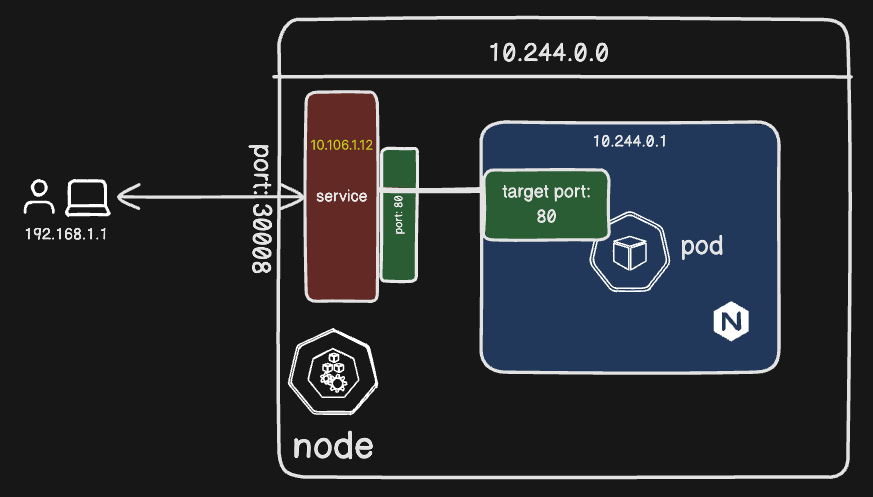

a NodePort service provides external access to the application by mapping a port on the node to a port on the pod.

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-service
    spec:
      type: NodePort
      ports:
        - targetPort: 80   # port on the pod
          port: 80         # port on the service
          nodePort: 30008  # port on the node (default range: 30000-32767)
      selector: # links to the pod with the labels
        app: myapp
        type: frontend

for this example, let’s assume we have a pod with this definition:

pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container
          image: nginx

after applying our service using kubectl apply -f service.yaml, we can check the available services using kubectl get services.

we can then send a GET request to the pod through the service using curl http://NODE_IP:30008, where NODE_IP is the ip address of one of the cluster’s nodes (not a pod ip) and 30008 is the nodePort defined above.

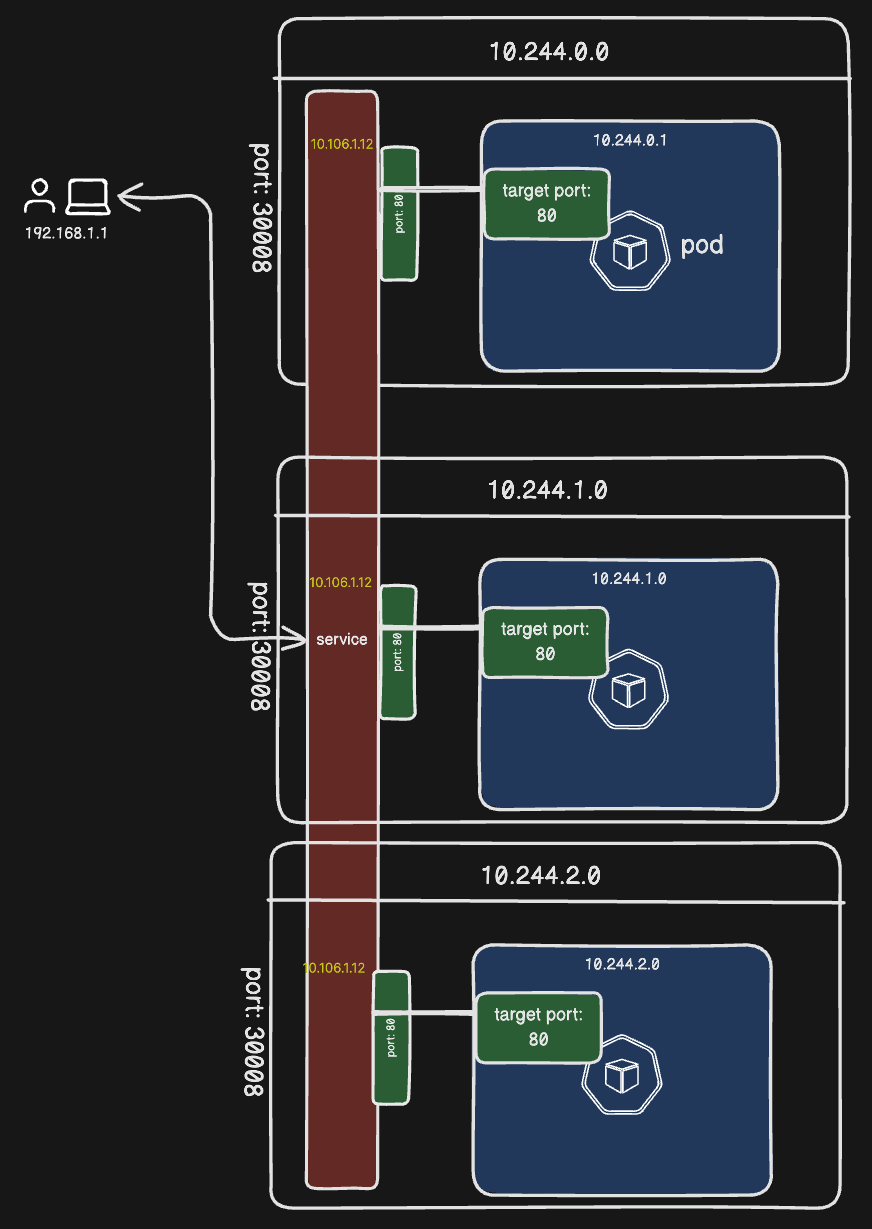

This is how we mapped a service to a single pod. But in production, we might run multiple instances of the application for high availability and load balancing. These replicated instances all carry the same labels, so the NodePort service selects all of them and automatically load balances requests across them, picking an instance at random. That’s a load balancer out of the box.
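To make the multi-replica case concrete, here is a minimal sketch of a Deployment that runs several copies of the same pod. The name myapp-deployment and the replica count of 3 are assumptions chosen for illustration; the labels and container are taken from the pod definition above. Every replica gets the same labels, so the selector of the NodePort service matches all of them:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment   # hypothetical name
spec:
  replicas: 3              # assumed replica count
  selector:
    matchLabels:           # must match the pod template labels below
      app: myapp
      type: frontend
  template:
    metadata:
      labels:              # same labels the service selector looks for
        app: myapp
        type: frontend
    spec:
      containers:
        - name: nginx-container
          image: nginx
```

No change to the service is needed: it finds pods by label, not by name or ip address.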

What if the pods are distributed across multiple nodes? In this case, kubernetes automatically spans the service across all the nodes in the cluster and opens the same node port on each of them, so our traffic is routed to a matching pod no matter which node the request is sent to! OUT OF THE BOX 🤯.

if the service type isn’t defined, it defaults to ClusterIP.

a ClusterIP service gives a set of pods a single stable ip address inside the cluster, so that other components (for example, frontend pods) can reach them. it is only reachable from within the cluster.

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: backend
    spec:
      type: ClusterIP
      ports:
        - targetPort: 80
          port: 80
      selector:
        app: myapp
        type: backend

we can also create a service imperatively: kubectl create service clusterip backend --tcp=80:80

to expose a pod, we can use the command kubectl expose pod httpd --port=80 --target-port=80

for this example, let’s assume we have a pod with this definition:

pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: backend
    spec:
      containers:
        - name: nginx-container
          image: nginx

upon creating the service using kubectl create -f service.yaml, we can see that the backend service is running using kubectl get services.
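because the service name stays stable, other pods can address the backend by name instead of by pod ip. as a rough sketch, assuming cluster DNS (e.g. CoreDNS) is running and the pod lives in the same namespace as the backend service, a frontend pod could be pointed at it like this. the pod name frontend-pod and the BACKEND_URL variable are hypothetical and only for illustration:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: frontend-pod        # hypothetical name
  labels:
    app: myapp
    type: frontend
spec:
  containers:
    - name: nginx-container
      image: nginx
      env:
        # hypothetical variable read by the application;
        # "backend" is the service name and 80 is its port
        - name: BACKEND_URL
          value: http://backend:80
```

if the backend pods are recreated and get new ip addresses, this value does not need to change.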

Let’s move on to the user-facing service.

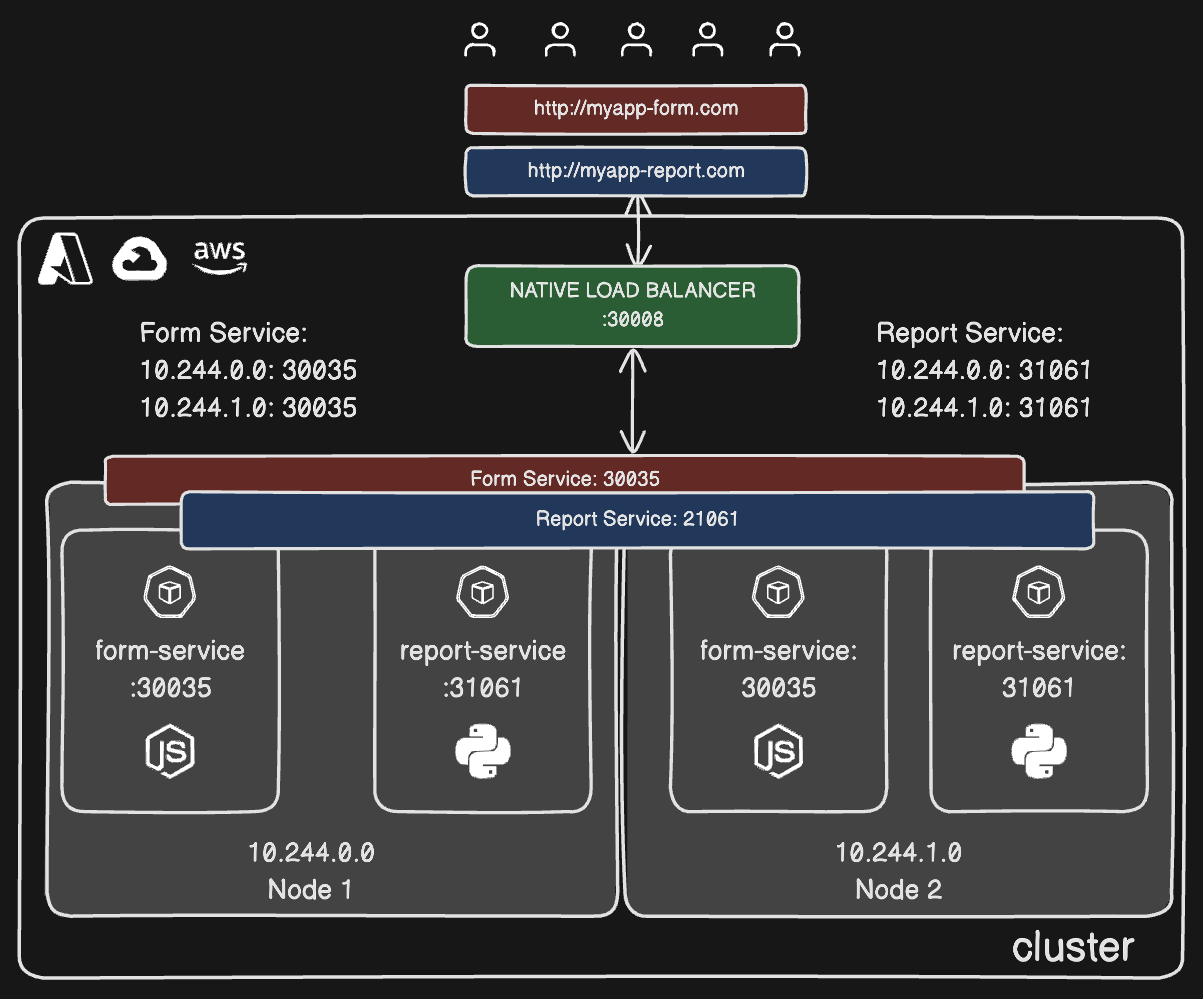

Suppose we have two backend services, Form Service and Report Service, replicated across multiple pods on multiple nodes.

How can we access these services from the frontend/client application? We need a way to route the requests to respective services and also try to balance load across these replicas.

For this, we could set up an entirely separate service, such as nginx, and configure routing to the respective nodes. But what if we could configure this within kubernetes itself? That would make our workflow more efficient.

With kubernetes, we can create a load balancer backed by the native load balancer of popular cloud platforms such as AWS, Azure, and GCP, by using a service of type LoadBalancer.

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp-service
    spec:
      type: LoadBalancer
      ports:
        - targetPort: 80
          port: 80
          nodePort: 30008